What Are Algorithms and Are They Biased Against Me?

(Bloomberg) -- Every minute, machines are shaping somebody’s future, as software decides which hospital patients should get extra monitoring or which credit card applicants get a thumbs-down. The hope was that programs combining objective criteria and mountains of data could be more efficient than humans while sidestepping their subjectivity and bias. It hasn’t worked out that way. Instead, the hospital program was found to underestimate the needs of Black patients, and the credit card software is being investigated after complaints that it discriminated against women. Algorithms, the logic at the heart of such programs, can replicate and even amplify the prejudices of those who create them.

1. What’s an algorithm?

A formula for processing information or performing a task. Arranging names in alphabetical order is a kind of algorithm; so is a recipe for making chocolate chip cookies. But the algorithms that power modern software are usually far more complicated. Companies such as Facebook Inc. and Alphabet Inc.’s Google have spent billions of dollars developing the algorithms they use to sort through oceans of information and zealously guard the secrets of their software. And as artificial intelligence makes these formulas ever more complex, in many cases it’s impossible to know how decisions are being reached. A prominent AI researcher at Google was recently fired after she resisted the company’s calls to retract a research paper that pointed out concerns about the technology the company uses.

2. How does bias creep in?

In any number of ways: People making a program can introduce biases, or the algorithms can “learn” bad behavior from training data before launch or from users afterward, causing results to warp over time. Software engineers can inadvertently discriminate against people. Facebook was embarrassed in 2015 when some Native Americans were blocked from signing up for accounts because their names — including Lance Browneyes and Dana Lone Hill — were judged fake. And Amazon.com Inc. ran into problems when an AI system it was testing to screen job applicants “taught” itself to weed out women. In other cases, algorithms may have been trained on too narrow a slice of reality.

3. How is representation a problem?

Insufficiently diverse datasets can cause biased outcomes, as when facial recognition programs trained on too few images of people with darker skin make more errors when classifying non-White users. An MIT study found that inadequate sample diversity undermined gender-classification systems from IBM, Microsoft and Face++. Darker-skinned women were the most misclassified group, with error rates as high as about 35%, compared with a maximum error rate of less than 1% for lighter-skinned men.
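One way auditors surface this kind of gap is to break error rates out by subgroup instead of reporting a single accuracy figure. The short Python sketch that follows does that on made-up records. The group labels echo the study, but the counts are hypothetical, chosen only so the results land near the figures above. `error_rates_by_group` is an illustrative helper, not code from the study.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the fraction of misclassified records for each subgroup.

    Each record is a (group, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy audit data, NOT figures from the MIT study.
records = (
    [("lighter-skinned men", "male", "male")] * 893
    + [("lighter-skinned men", "male", "female")] * 7
    + [("darker-skinned women", "female", "female")] * 65
    + [("darker-skinned women", "female", "male")] * 35
)

# A single headline number pools every group together.
overall = sum(truth != predicted for _, truth, predicted in records) / len(records)
print(f"overall error rate: {overall:.1%}")
for group, rate in error_rates_by_group(records).items():
    print(f"{group}: {rate:.1%}")
```

With these invented counts, the overall error rate works out to 4.2%, which sounds respectable, while the subgroup rates are under 1% and 35%. Because the larger group dominates the pooled figure, it can hide the disparity entirely.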

4. How else do algorithms go wrong?

One other way is through misleading “proxy data.” The term refers to the way algorithms use scattered bits of data to characterize people. For instance, it’s illegal in the U.S. to make hiring or lending decisions based on race, gender, age or sexual orientation, but there are proxies for these attributes in big datasets: The music you stream on YouTube suggests your age, while membership in a sorority gives away your gender; your address may hint at your racial or ethnic heritage.
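Here is a minimal sketch of how a proxy works, under invented assumptions. The decision rule never sees an applicant's group, only a zip code. But the hypothetical data place most of group B in one zip code, so approval rates still split along group lines. The zip codes, the groups and the `FAVORED_ZIPS` rule are all made up for illustration.

```python
from collections import defaultdict

# Hypothetical applicants as (zip_code, group) pairs. The group label is used
# only to audit outcomes; the decision rule below never reads it.
applicants = (
    [("10001", "A")] * 80 + [("10001", "B")] * 20
    + [("20002", "A")] * 20 + [("20002", "B")] * 80
)

# Hypothetical rule: approve only applicants from zip codes that were
# favored in the past.
FAVORED_ZIPS = {"10001"}


def approve(zip_code):
    return zip_code in FAVORED_ZIPS


def approval_rate_by_group(applicants):
    """Share of each group approved by a rule that only looks at zip code."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for zip_code, group in applicants:
        total[group] += 1
        approved[group] += int(approve(zip_code))
    return {group: approved[group] / total[group] for group in total}


print(approval_rate_by_group(applicants))
```

In this toy data, 80% of group A is approved against 20% of group B. Dropping the protected attribute from the inputs doesn't remove its influence when another field stands in for it.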

5. How does that lead to discrimination?

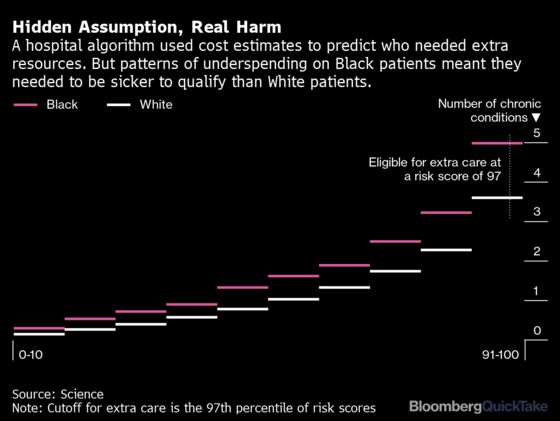

The hospital algorithm used an estimate of cost of care to identify high-risk patients in need of extra attention. But historically in the U.S., less is spent on average on Black patients than on their White counterparts. As a result, Black patients needed to be sicker to qualify under the algorithm, according to a study led by researchers from the University of California, Berkeley and the University of Chicago. Other problems involve readings of the past that produce biased predictions of the future.
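The mechanism can be sketched in a few lines of Python. This is a deliberately simplified stand-in, not the hospital's actual model. It assumes the predictor forecasts next year's cost as last year's spending, uses a count of chronic conditions as a rough measure of need, and flags anyone above a hypothetical $10,000 threshold. All patient figures are invented.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    chronic_conditions: int  # rough stand-in for actual health need (assumption)
    past_spending: float     # dollars spent on this patient's care last year


# Hypothetical cutoff for extra monitoring, chosen only for illustration.
COST_THRESHOLD = 10_000.0


def predicted_cost(patient):
    # Simplest possible predictor (assumption): next year's cost looks like last year's.
    return patient.past_spending


def flag_for_extra_care(patient):
    # The label is cost, not sickness, so lower historical spending means
    # a patient must be sicker to cross the same line.
    return predicted_cost(patient) >= COST_THRESHOLD


patients = [
    Patient("patient 1", chronic_conditions=4, past_spending=12_000.0),
    Patient("patient 2", chronic_conditions=4, past_spending=8_000.0),
    Patient("patient 3", chronic_conditions=6, past_spending=10_500.0),
]
for p in patients:
    print(p.name, p.chronic_conditions, flag_for_extra_care(p))
```

Patients 1 and 2 are equally sick, but only the one with higher past spending is flagged. Patient 3, whose care cost less than patient 1's despite more conditions, clears the bar only by being sicker.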

6. How so?

Consider online job-finding services, which researchers have shown are less likely to refer women and people of color for high-paying positions because those job seekers don’t match the typical profile of people in those jobs: mostly White men. Systems like these use a technique called “predictive modeling” that makes inferences from historic patterns in data. They can go astray when data is inaccurate or misused. One study found that systems that used algorithms to decide who got loans were 40% less discriminatory than face-to-face interactions but still charged higher interest rates to Black and Latino borrowers than was justified by their risk of default. The algorithms also replicated one form of unethical price gouging, charging higher rates to customers who lived in areas where people had fewer opportunities than elsewhere to shop around for better deals.
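To see how a model built on historic patterns can echo them, consider a toy referral score that rates candidates by how closely they resemble past holders of a job. Everything here is hypothetical, including the `referral_score` function and the 90-to-10 history. Real systems use far more features and often pick up gender through proxies rather than reading it directly.

```python
from collections import Counter

# Hypothetical history of people who held a high-paying role.
past_hires = (
    [{"gender": "man", "degree": "engineering"}] * 90
    + [{"gender": "woman", "degree": "engineering"}] * 10
)


def referral_score(candidate, history):
    """Average, over the candidate's attributes, of how common each value
    was among past holders of the job (a hypothetical 'profile match')."""
    shares = []
    for attribute, value in candidate.items():
        counts = Counter(person[attribute] for person in history)
        shares.append(counts[value] / len(history))
    return sum(shares) / len(shares)


# Two candidates with the same qualification.
man = {"gender": "man", "degree": "engineering"}
woman = {"gender": "woman", "degree": "engineering"}
for candidate in (man, woman):
    print(candidate["gender"], f"{referral_score(candidate, past_hires):.2f}")
```

The two candidates have identical degrees, yet the man scores 0.95 and the woman 0.55, purely because the history the model learned from was lopsided.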

7. How does bias get amplified in algorithms?

Software can echo or compound stereotypes. The city of Chicago announced plans in 2017 to employ “predictive policing” software to assign additional officers where they were most needed. The problem was that the model reflected existing racial disparities in enforcement and therefore directed resources to neighborhoods that already had the largest police presence, in effect reinforcing the existing human biases of the cops. Similar problems have surfaced with programs that assess people in the criminal justice system. Police in Durham, England, used data from credit-scoring agency Experian, including income levels and purchasing patterns, to predict recidivism rates for people who had been arrested. The results suggested, inaccurately, that people from socio-economically disadvantaged backgrounds were more likely to commit further crimes.
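A toy simulation shows how this kind of loop can amplify an initial imbalance. Its simplifying assumptions are ours, not the Chicago program's. Two areas have identical true crime, recorded incidents scale with each area's share of officers, and each round a fixed number of extra officers goes to whichever area has the most recorded incidents. `simulate_patrols` and all numbers are hypothetical.

```python
def simulate_patrols(officers, true_incidents, rounds, extra_per_round):
    """Each round, send extra officers to the area with the most *recorded* incidents.

    Simplifying assumption: recorded incidents are true incidents scaled by the
    area's share of officers, since crime no officer observes is less likely
    to enter the data.
    """
    officers = dict(officers)  # copy so the caller's dict is not mutated
    history = []
    for _ in range(rounds):
        total = sum(officers.values())
        recorded = {
            area: true_incidents[area] * officers[area] / total
            for area in officers
        }
        hotspot = max(recorded, key=recorded.get)
        officers[hotspot] += extra_per_round
        history.append(dict(officers))
    return history


# Hypothetical: both areas have the same true amount of crime, but area A
# starts with more officers because of past enforcement patterns.
history = simulate_patrols(
    officers={"A": 6, "B": 4},
    true_incidents={"A": 100, "B": 100},
    rounds=3,
    extra_per_round=2,
)
for round_number, allocation in enumerate(history, start=1):
    print(round_number, allocation)
```

Although the two areas have the same true amount of crime, area A ends the run with 12 officers to B's 4, because each round's data reflect where police were sent in the round before.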

--Ali Ingersoll contributed to an earlier version of this article.

©2020 Bloomberg L.P.