(Bloomberg Opinion) -- What is an autonomous car — and more to the point, has Uber Technologies Inc. been operating them at all?

From its public statements, you’d certainly think that its vehicles can more or less drive themselves, with humans required only as a safety back-up while the system is in trial mode. Take this video from Uber’s Advanced Technologies Group, posted in April 2017, showing the company’s self-driving cars cruising smoothly around Pittsburgh. Or this one from October 2017: “We have hundreds of self-driving vehicles out in the world,” the narrator says. The car’s autonomous systems “make sure the vehicle’s aware of everything around it, like the stop sign up ahead, that woman crossing the street, and the cyclist coming up behind them.”

The preliminary report released Thursday by the U.S. National Transportation Safety Board into a fatal Uber crash in Tempe, Arizona, casts those statements in an awkward light. As it turns out, while the vehicle was indeed aware of its surroundings, it was programmed not to translate that awareness into action, or even to communicate the information to the human driver.

As described in the four-page report, the automated braking that might have prevented the death of pedestrian Elaine Herzberg had been switched off “to reduce the potential for erratic vehicle behavior.” Such functions were delegated to the driver, who was simultaneously responsible for preventing accidents and monitoring the system’s performance.

The word that jumps out is “erratic.” That shouldn’t be a surprise for anyone who’s been involved in designing autonomous vehicles. The safety-first approach that AV developers should rightly be adopting when dealing with hazards will naturally turn up a lot of false positives.

Self-driving systems tend to have trouble distinguishing between a crumpled ball of paper and a rock, or a wind-blown plastic bag and a child chasing a ball. If they braked for every object identified as a potential hazard, they’d risk being rear-ended almost constantly — one of the biggest problems that Alphabet Inc.’s Waymo has tried to overcome.

Still, self-braking technology is among the most basic functions to be expected from a vehicle that bills itself as autonomous. Honda Motor Co. first put out cars with a version of this feature in 2003. The Volvo SUV involved in the Tempe crash had it as a standard feature, though Uber had, as we saw above, disabled it.

Under the zero-to-five level system of grading self-driving capability developed by the Society of Automotive Engineers, a car that lacks this functionality would be classified as a two at best — far from the level three or four suggested by Uber’s videos, let alone the level-five Holy Grail of true autonomy that no company has yet reached.

Uber has initiated a review of its self-driving program and brought in a former NTSB chairman to examine its safety culture since the Tempe incident in March, according to a company spokesperson. That’s welcome — and to be sure, Uber isn’t the only one with issues around how it represents its driving systems.

The Center for Auto Safety and Consumer Watchdog this week called on the U.S. Federal Trade Commission to investigate what they called “dangerously misleading and deceptive advertising and marketing practices and representations” related to Tesla Inc.’s Autopilot feature.

Even among the companies whose performance looks closest to camera-ready, such as Waymo and General Motors Co., there’s a frustrating lack of detail. Unlike city streets, highways have been carefully designed to minimize the risk of accidents, with divided lanes, grade-separated junctions, and minimal stops. Completing 1,000 autonomous miles on such a roadway without incident may well be easier than covering a mile in the city center.

Still, the difference between an AV in which the human operator is a rarely used emergency back-up and one in which they’re a fundamental safety feature is enormous. Developers tend to suggest their cars are the former — but in Uber’s case at least, they were clearly the latter.

The risk is that AV developers are essentially selling a version of the Mechanical Turk, the 18th-century chess-playing automaton that wowed Napoleon Bonaparte and Benjamin Franklin but ultimately turned out to be operated by a human concealed inside.

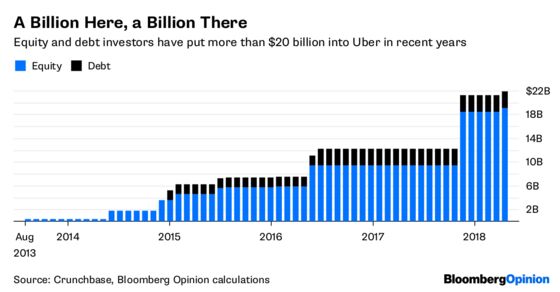

It’s great news that Uber finally turned a profit of sorts in its most recent quarter. But safety and securities regulators are entitled to expect that companies claiming to be months away from offering commercial autonomous vehicles, a business that depends on removing drivers from the equation, have something resembling that in the works.

To contact the editor responsible for this story: Matthew Brooker at mbrooker1@bloomberg.net

©2018 Bloomberg L.P.