Facebook Hobbled Team Tasked With Stemming Harmful Content

(Bloomberg) -- Seven months ago, Mark Zuckerberg testified to Congress that Facebook Inc. spends billions of dollars to keep harmful content off its platform. Yet some of his own employees had already realized the inadequacy of their efforts to tamp down objectionable speech.

A trove of internal documents shows that Facebook’s Integrity team, the group tasked with stemming the flow of harmful posts, was fighting a losing battle to demote problematic content. The documents were part of disclosures made to the U.S. Securities and Exchange Commission and provided to Congress in redacted form by legal counsel for Frances Haugen, a former product manager who worked on the Integrity team before she left Facebook earlier this year. The redacted versions were obtained by a consortium of news organizations, including Bloomberg News.

Detailed reports show that the social media giant’s algorithms were geared toward keeping people on the platform, where their valuable attention is monetized by showing them ads. As early as 2019, employees working on integrity measures realized that their tools were no match for a system designed to promote content likely to keep people scrolling, liking, commenting and sharing. This internal battle played out across the News Feed, Facebook’s personalized home page fed by the company’s machine-learning algorithms.

The documents reveal that while Facebook publicly touted its investments in technology and engineers to keep harmful content off its platform, the company recognized internally that those efforts often failed. Employees’ proposals to fix the problems by tweaking how Facebook’s algorithms decide which content to show were often stymied or even overruled.

Joe Osborne, a Facebook spokesman, said it’s not accurate to suggest that Facebook encourages bad content, and he cited external research that found that the platform isn’t a significant driver of polarization.

“Every day our teams have to balance protecting the ability of billions of people to express themselves openly with the need to keep our platform a safe and positive place,” Osborne said in an emailed response to questions. “We continue to make significant improvements to tackle the spread of misinformation and harmful content.”

Civic Integrity

The Integrity team got its start in 2018 as Civic Integrity, which grew out of the product team responsible for building tools like election day reminders and voter resources. But even when Zuckerberg agreed to create the Integrity team, he initially didn’t give them more headcount, effectively forcing the small group to split its time between election-related tools and policing harmful political content, according to a former Facebook employee who participated in discussions about the team’s inception.

As the Integrity team grew to build the products responsible for policing other kinds of dangerous content, the documents show that it consistently faced high barriers to launch new initiatives and had to explain any potential impact on growth and engagement. Team members also found that their tools for detecting and dealing with offensive material weren’t up to the task.

A major problem for the team was that demoting harmful content even by 90%, according to an October 2019 report, wouldn’t dent the promotion of posts that in some cases were amplified hundreds of times by algorithms designed to boost engagement. The questionable content might still be one of the top items a user would see.
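The arithmetic behind that finding can be sketched in a few lines. This is an illustration only: it assumes a simple multiplicative model in which an engagement boost and an integrity demotion are both applied to the same ranking score, and the 300x boost is a hypothetical figure standing in for "hundreds of times." Neither the model nor the function name comes from the documents.

```python
def net_amplification(boost: float, demotion: float) -> float:
    """Return the net multiplier on a post's ranking score.

    boost: hypothetical factor by which engagement ranking multiplies the score.
    demotion: fraction of the score removed by a demotion (0.9 means 90%).
    Assumes the two effects simply multiply, which is a simplification.
    """
    return boost * (1.0 - demotion)


# Hypothetical figures: a post amplified 300x, then demoted by 90%.
net = net_amplification(boost=300.0, demotion=0.9)
print(f"net multiplier: {net:.0f}x")
```

Under these assumptions the demoted post still ends up ranked about 30 times higher than it would be with no boost at all, which is why a steep demotion alone might not push it out of the top of a feed.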

“I worry that Feed is becoming an arms race,” the report’s author said, lamenting how different teams wrote the code to design the system to act in certain ways, sometimes at cross purposes.

Another document from December 2019 recognized that Facebook is responsible for the way the automated ranking process orders content on the News Feed, and with it, the worldview of its users.

We have “compelling evidence that our core product mechanics, such as virality, recommendations, and optimizing for engagement, are a significant part of why these types of speech flourish on the platform,” the December 2019 document says. “The mechanics of our platform are not neutral.”

In public, Facebook’s executives, including Chief Executive Officer Zuckerberg, cast the platform as a public forum for free speech, rather than a publisher exerting editorial control via algorithms that determine which content will go viral. Internally, Facebook employees recognized that “virality is a very different issue from free speech, as implemented by the algorithms we have created,” according to the December document.

‘Divisive, Hateful Content’

Until 2009, the News Feed was a chronological display of news articles, photos and posts from friends. As the company grew, attracted more users and sold more ads, Facebook introduced algorithms to sort through larger volumes of content and re-order users’ experiences to keep them coming back to the platform. According to the December 2019 document, employees worried they bore more editorial responsibility for what people saw and what went viral, including “hate speech, divisive political speech, and misinformation.”

Changes to Facebook’s algorithm were cited in one of the eight SEC complaints filed by Haugen, who copied internal documents to back up her concerns about the company’s conduct. In a letter addressed to the SEC Office of the Whistleblower, Haugen said Facebook misled investors about an algorithm change to emphasize “meaningful social interactions,” such as comments and sharing, but ended up increasing “divisive, hateful content.”

The letter cites public statements by Zuckerberg to Congress and on earnings calls that, according to Haugen, are contradicted by internal documents highlighting the harms of this algorithm change.

Facebook’s SEC filings include disclosures about the company’s challenges, including keeping the platform safe, according to Osborne, the spokesman. He said Facebook is “confident that our disclosures give investors the information they need to make informed decisions.”

The SEC complaint includes a note from a departing employee reflecting on why the company doesn’t act on the serious risks identified internally. The employee describes repeatedly seeing promising interventions “prematurely stifled or severely constrained.”

Haugen said other solutions never advanced because they weren’t a priority for a company motivated by metrics geared toward growth. She said a data scientist with a proposed change to improve Facebook products long-term “might get discouraged from working on it because it’s not seen as an immediate impact, or something that can be accomplished and scaled within three or six months, and it gets dropped on the floor.”

The Integrity team underwent structural changes that added to the frustration that many members felt about their Sisyphean task. In December 2020, after the U.S. presidential election but while misinformation was still swirling about the result, the Civic Integrity team was disbanded and folded into what became known as Central Integrity. Haugen, who had been part of the Civic Integrity team, said that’s when she became aware of Facebook’s “conflicts of interest between its own profits and the common good.”

“It really felt like a betrayal of the promises that Facebook had made to people who had sacrificed a great deal to keep the election safe, by basically dissolving our community,” Haugen told Congress.

Amplifying Information

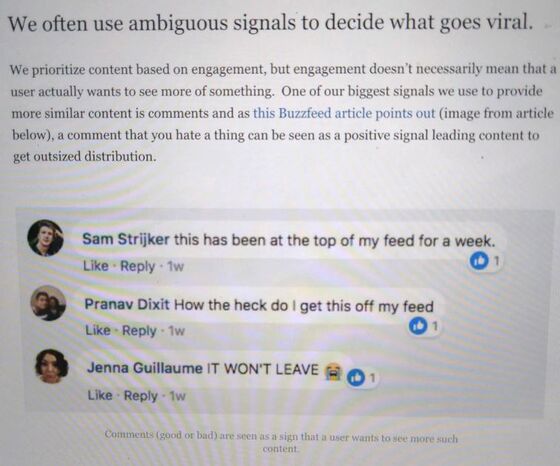

It’s not easy for software engineers to develop a system that will correctly identify and act on harmful information -- in English or in the roughly 140 other languages used on Facebook. Part of the problem is with the inputs that power the algorithms, which act on signals from users that can be ambiguous, or even contradictory.

One document gives the example of a person commenting “haha,” which could be a good reaction to a funny post, or a negative reaction to a post containing dubious medical advice. The algorithm doesn’t know the difference.

Facebook employees also highlighted the need for users to have a way to signal dislike without unknowingly amplifying the information. For example, a disapproving comment, or even an angry face emoji, boosts a post’s ranking, propagating its spread.

Facebook employees proposed another tool to address misinformation by demoting so-called deep reshares, or content that is reposted by a user who isn’t a friend or follower of the original poster. Those reshares were fueling false information on the platform, Facebook data showed.
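The definition above can be made concrete with a minimal sketch. It assumes a toy connection map and a flat demotion factor of 0.5; the names `Reshare`, `is_deep_reshare`, `ranked_score` and `demotion_factor` are hypothetical and are not drawn from the documents or from any Facebook system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reshare:
    resharer: str         # account doing the reposting
    original_poster: str  # account that first published the content


def is_deep_reshare(reshare: Reshare, connections: dict[str, set[str]]) -> bool:
    """True when the resharer is not a friend or follower of the original poster.

    connections maps each account to the accounts it is friends with or follows
    (a hypothetical stand-in for a real social graph).
    """
    if reshare.resharer == reshare.original_poster:
        return False
    return reshare.original_poster not in connections.get(reshare.resharer, set())


def ranked_score(base_score: float, reshare: Reshare,
                 connections: dict[str, set[str]],
                 demotion_factor: float = 0.5) -> float:
    """Scale the score down for deep reshares; the factor is an assumed value."""
    if is_deep_reshare(reshare, connections):
        return base_score * demotion_factor
    return base_score


# "bo" follows "ana"; "cy" follows only "bo", so "cy" resharing "ana" is deep.
connections = {"bo": {"ana"}, "cy": {"bo"}}
direct = Reshare(resharer="bo", original_poster="ana")
deep = Reshare(resharer="cy", original_poster="ana")
print(ranked_score(10.0, direct, connections))
print(ranked_score(10.0, deep, connections))
```

In this toy example the direct reshare keeps its score while the deep reshare is cut in half, regardless of what the post says.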

The team estimated that demoting the reshares could have a significant effect on reducing misinformation. But Haugen said Facebook didn’t implement the proposal because it would have reduced the amount of content on the platform.

“Reshares are a crutch. They amplify the amount of content in the system. They come with a cost, but they’re a crutch that Facebook isn’t willing to give up,” she said in an interview with the media consortium.

Osborne, the Facebook spokesperson, said the company in rare cases reduces content that has been shared by people further removed from the original source. He said this intervention is used sparingly because it treats all content equally, affecting benign speech along with misinformation.

Facebook has made moves in recent years to give people more control over the content in their News Feeds. The company says it now has an easier way for users to say they want more recent posts, rather than machine learning-ranked content, and people can mark some friends or sources of information as favorites.

‘A Hundred Percent Control’

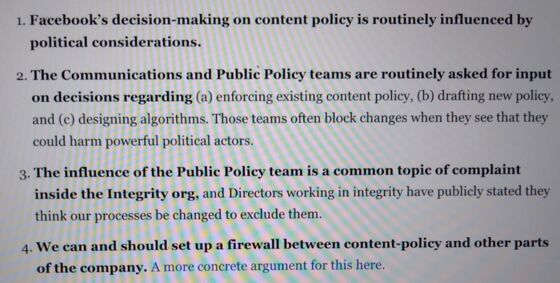

The leaked documents also reveal concerns from some employees that their Facebook colleagues who interact with elected officials have too much control over content decisions. Members of the Public Policy team, according to one December 2020 document, get to weigh in “when significant changes are made to our algorithms.”

“Facebook routinely makes decisions about algorithms based on input from Public Policy,” the document says. The Public Policy team members “commonly veto launches which have significant negative impacts on politically sensitive actors.”

The note argues for a firewall between Facebook employees with political roles and those who are designing the platform, comparing the company to a traditional media organization that separates its business and editorial divisions.

Osborne said the team that sets Facebook’s community standards seeks input not just from Public Policy, but also from the parts of the company responsible for operations, engineering, human rights, civil rights, safety and communications.

Recognition that Facebook exerts editorial control over content has fueled renewed calls in Washington for the company to be held accountable for business decisions -- executed by the platform’s automated processes -- that determine what content a consumer sees. Several bills have been introduced in Congress to chip away at the legal protections for online platforms known as Section 230 of a 1996 law.

Facebook has advocated for updated internet regulation, and Zuckerberg told Congress earlier this year that companies should only enjoy the Section 230 liability shield if they implement best practices for removing illegal content.

Haugen in her testimony before Congress highlighted the challenges with regulating content, which is hard to classify and often depends on context. She advocated for a different approach: to dial back algorithmic interventions and return to a more chronological user experience in which information would move at a slower pace.

“They have a hundred percent control over their algorithms,” Haugen told Congress. “Facebook should not get a free pass on choices it makes to prioritize growth and virality and reactiveness over public safety. They shouldn’t get a free pass on that because they’re paying for their profits right now with our safety.”

©2021 Bloomberg L.P.