Facebook Privately Worried About Hate Speech Spawning Violence

(Bloomberg) -- When hundreds of advertisers halted spending on Facebook Inc. in July 2020 to protest hate speech on the social media site, top executives took a defensive public stance, saying the company was aggressively fighting racism on its networks.

“When we find hateful posts on Facebook and Instagram, we take a zero tolerance approach and remove them,” Vice President of Global Affairs Nick Clegg said at the time.

What Clegg didn’t mention was that Facebook’s own analysts fretted internally that the company wasn’t taking down enough hateful content, and that its platform might be inciting some of the violence that gripped Minneapolis during protests over the police killing of George Floyd.

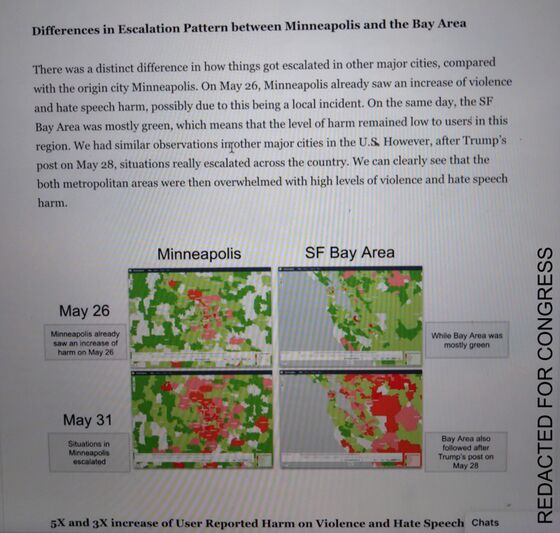

The Facebook analysts compiled nationwide user reports of violent and hateful content onto a color-coded heat map and discovered a startling trend: Hours after Floyd’s death, reports of offensive posts spiked in two Minneapolis ZIP codes that had seen the largest and most combative demonstrations, turning the area from green to varying shades of red. Across the rest of the U.S. where protests hadn’t yet emerged, the map was still mostly green, depicting only “sporadic” harmful content.

While the researchers cautioned that the pattern didn’t prove social media posts caused on-the-ground destruction, they wondered whether Facebook was doing enough to “limit the harm of violence and hate speech to users.” Facebook’s algorithms and content-moderation systems haven’t always prevented hateful speech from proliferating at critical times.

The concerns came to light in a trove of disclosures that former product manager Frances Haugen made to the U.S. Securities and Exchange Commission and that her legal counsel provided to Congress in redacted form. The redacted versions were reviewed by a consortium of news organizations, including Bloomberg. Research reports and company memos, some of which haven’t been reported previously, suggest that:

- Facebook executives have long known that the company’s hate-speech problem was bigger and more entrenched than it disclosed publicly.

- While Facebook prioritizes rooting out violent and hateful content in English-speaking Western countries, it neglects developing regions that are more vulnerable to real-world harm from negativity on social media.

- Despite those struggles, Facebook sought ways to cut staffing costs at the division in charge of moderating such content.

Facebook’s policies governing user-posted content prohibit direct attacks against people on the basis of their race, country of origin, religion, sexual orientation and other sensitive attributes. That includes posts with dehumanizing language, suggestions of inferiority, harmful stereotypes or calls for segregation. In recent years, the company has expanded its policy to bar White separatist ideologies and any ads that claim particular groups are a threat to the safety or survival of others.

To catch hate speech, Facebook uses artificial intelligence systems to scan for images and text that look like they could violate its policies. Sometimes those systems automatically remove the offensive posts. Other times Facebook demotes suspected hate speech and sends it to human reviewers for a final decision.

Publicly, Facebook touts the success of its systems in detecting the vast majority of hate speech. The social media network says its AI-powered system detected 96.7% of all the hate speech it took action on during the first three months of this year, up from 38% in 2018. Chief Executive Officer Mark Zuckerberg told Congress in March that “more than 95% of the hate speech that we take down is done by an AI and not by a person.”

But some of Zuckerberg’s own employees warned that neither Facebook’s human reviewers nor its automated systems were catching all or even most of the hateful content. In practice, the company was only removing 5% or less of hate speech, the documents suggest.

Unless there’s a major change in strategy, getting that number beyond 10% to 20% would be “very difficult,” said one employee whose identity was redacted.

In defending the implementation of Facebook’s policies, spokesman Andy Stone said that the statistic cited refers to hate speech that is automatically removed and doesn’t include posts that are demoted or taken down after human review. But the company declined to say what percentage of hate speech is demoted. Stone also said that hate speech represents well under 1% of overall content on its platform and is declining.

“We think focusing on prevalence, the amount of hate speech people actually see on the platform — and how we reduce it using all of our tools — is the most important measure,” Guy Rosen, Facebook’s vice president of integrity, said in a blog post earlier this month. “Focusing just on content removals is the wrong way to look at how we fight hate speech.”

In a separate blog post over the weekend, Facebook said it adopted a strategy in 2018 to monitor and remove content that violates its policies, especially in countries most at risk of offline violence. The company said it evaluates factors such as social tensions and civic participation.

Hate speech is notoriously difficult for social media companies to handle, in part because AI systems have trouble evaluating a speaker’s intent. Offensive language in one country might mean something completely different in another region. Words meant to offend Black or gay people might be considered innocent when used by members of those communities.

And conservatives and liberals often define hate speech differently on certain hot-button issues, such as the rights of transgender people. Tech companies need people who understand the languages and cultural nuances of different regions to help train those systems accurately.

Even among Facebook’s more than 60,000 employees, there are divisions over what conclusions to draw from research — and what actions should be taken. Some staffers may believe a particular country’s election needs more or less moderation, but that may conflict with the views of the public-policy team, which is weighing the political ramifications.

“We’ve made significant investments in people and technology to keep our platform safe, and have made fighting misinformation and providing people with reliable information a priority,” Stone said. “If any research had identified an exact solution to these complex challenges, the tech industry, governments and society would have solved them a long time ago. We take a comprehensive approach to address hate speech and while we have more work to do, we remain committed to getting this right.”

Documents show Facebook knows that the same strategies it deploys to keep its 2.9 billion users coming back every month are also what make hate speech ripple across its networks so quickly. In 2018, Facebook started showing users more “meaningful” posts from friends and family, rather than content from brands and publishers, in order to drive more engagement.

That meant a boost for posts about newborns and weddings but also for content that elicits strong emotions, such as misinformation and hate. “Research has shown how outrage and misinformation are more likely to be viral,” one employee wrote in 2019.

The Wall Street Journal, in its series “The Facebook Files,” previously reported on the company’s practices and the documents shared by Haugen.

Facebook already knows which people and groups are most likely to spread prejudice, but those same users often manage to evade enforcement action, the records show. Many users in the U.S. who are serial hate-speech offenders also regularly share misinformation, and 99% of them remain active on the site, according to one internal report dated August 2020. They often join like-minded groups that promote lies and bias, where fellow members rarely report them for breaking the rules. Civic groups can often grow very quickly, sometimes to hundreds of thousands of members in a matter of days.

In fact, Facebook rated 70% of the 100 most active civic groups in the U.S. as “unrecommendable” because they share too much offensive content, according to another internal report.

The problem is even bigger overseas. Facebook ranks hate speech as its fourth-most time-consuming issue but allocates few resources to countries outside the Western world, the documents show. The company spent nearly 60% of hate-speech training time on just seven languages, with English representing a quarter, one report said. That leaves some of the world’s most vulnerable places in a digital free-for-all.

In the documents, the company cited a study conducted by researchers at Princeton University and the University of Warwick. It found that in Germany, “anti-refugee hate crimes increase in areas with higher Facebook usage.” The study largely ruled out the possibility that increased hate speech on the platform simply correlated with, but did not cause, an uptick in real-world violence.

After looking at periods when Facebook or the internet had an outage, the researchers found that anti-refugee crimes dropped. One of the researchers, Karsten Müller, explained that language on Facebook can serve as a “trigger factor” for people potentially prone to committing hate crimes. “This can push some of these perpetrators over the edge to go carry out such crimes,” he said.

In Afghanistan, where the company has 5 million monthly users, researchers found that hate speech is the second-most-reported abuse category on its system, after bullying and harassment. Yet a review showed that Facebook acted on only 0.23% of hate speech.

But rather than spending more money on moderators, Facebook did the opposite. In 2019, the company shifted spending to rely more on AI and less on workers stationed around the world. At the time, evaluating hate speech claims had cost Facebook about $2 million a week, or $104 million a year. A company report from that year about making hate-speech reviews “more efficient” said that spending had begun to add up to “real money.” Facebook generated $70.7 billion in revenue in 2019.

Stone said that human moderation is difficult to scale up enough to address the problem, and that the company’s overall budget for fighting hate speech remained steady that year.

Facebook’s hate-speech strategy was put to the test during the summer of civil unrest over Floyd’s murder. A few days after the killing, President Donald Trump lambasted Minneapolis on social media for not quelling the public demonstrations and threatened to send in the National Guard. “When the looting starts, the shooting starts,” he wrote.

Facebook systems were almost 90% certain that Trump’s post broke its violence and incitement policy, according to the documents, and content that passes that threshold is typically removed automatically. But Trump was considered one of the many public figures who face consequences for their comments only if people at the company decide to take action themselves.

In June 2020, civil-rights groups met privately with Zuckerberg to urge him to take down more of Trump’s posts, including the “looters and shooters” comment. Rashad Robinson, president of Color of Change, argued that the comment could invite violence. Zuckerberg defended the post, saying Trump was merely explaining potential government action, according to Robinson.

“I was like, ‘This was dog whistling and we know this is dog whistling,’” he said.

The president’s comments ended up attracting a deluge of hateful and violent comments, such as, “Bullets work!” and “Start shooting these thugs.” After Trump’s post, according to the internal study with the heat map, reports of hateful and violent content saw a fivefold increase in some places.

By the hour, Facebook researchers saw the U.S. turn from green to light red. The map went blood-red in pockets where the protests were particularly intense.

“At the end of June 2,” the document said, “we can see clearly that the entire country was basically ‘on fire.’”

©2021 Bloomberg L.P.