For Tesla, Facebook and Others, AI’s Flaws Are Getting Harder to Ignore

(Bloomberg Opinion) -- What do Facebook Inc. co-founder Mark Zuckerberg and Tesla Inc. Chief Executive Elon Musk have in common? Both are grappling with big problems that stem, at least in part, from putting faith in artificial intelligence systems that have underdelivered. Zuckerberg is dealing with algorithms that are failing to stop the spread of harmful content; Musk, with software that has yet to drive a car in the ways he has frequently promised.

There is one lesson to be gleaned from their experiences: AI is not yet ready for prime time. Furthermore, it is hard to know when it will be. Companies should consider focusing on cultivating high-quality data — lots of it — and hiring people to do the work that AI is not ready to do.

Designed to loosely emulate the human brain, deep-learning AI systems can spot tumors, drive cars and write text, showing spectacular results in a lab setting. But therein lies the catch. When it comes to using the technology in the unpredictable real world, AI sometimes falls short. That’s worrying when it is touted for use in high-stakes applications like healthcare.

The stakes are also dangerously high for social media, where content can influence elections and fuel mental-health disorders, as revealed in a recent exposé of internal documents from a whistleblower. But Facebook’s faith in AI is clear on its own site, where it often highlights machine-learning algorithms before mentioning its army of content moderators. Zuckerberg also told Congress in 2018 that AI tools would be “the scalable way” to identify harmful content. Those tools do a good job of spotting nudity and terrorist-related content, but they still struggle to stop misinformation from propagating.

The problem is that human language is constantly changing. Anti-vaccine campaigners use tricks like typing “va((ine” to avoid detection, while private gun-sellers post pictures of empty cases on Facebook Marketplace with a description to “PM me.” These fool the systems designed to stop rule-breaking content, and to make matters worse, the AI often recommends that content too.
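The evasion trick is easy to see in miniature. The Python sketch below is purely illustrative and does not describe Facebook’s actual systems, which rely on machine-learning classifiers rather than a word list; it assumes a hypothetical one-word blocklist and a hypothetical table of look-alike characters. A verbatim keyword match misses “va((ine”, undoing known substitutions catches it, and a fresh spelling slips through again.

```python
import re
import unicodedata

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS = {"vaccine"}

# Hypothetical table of look-alike characters that evaders swap in for letters.
LOOKALIKES = str.maketrans({"(": "c", "0": "o", "1": "i", "3": "e", "@": "a", "$": "s"})


def naive_flag(text: str) -> bool:
    """Flag text only if a blocked term appears verbatim (case-insensitive)."""
    words = re.findall(r"\w+", text.lower())
    return any(word in BLOCKED_TERMS for word in words)


def normalized_flag(text: str) -> bool:
    """Undo the known character substitutions above, then match."""
    folded = unicodedata.normalize("NFKC", text).lower().translate(LOOKALIKES)
    words = re.findall(r"[a-z]+", folded)
    return any(word in BLOCKED_TERMS for word in words)


print(naive_flag("Say no to the va((ine"))       # False: "va((ine" splits into "va" and "ine"
print(normalized_flag("Say no to the va((ine"))  # True: "((" is mapped back to "cc"
print(normalized_flag("Get the v@xx"))           # False: a new spelling the table never anticipated
```

Every new spelling demands another rule, which is why language that keeps changing is so hard to police this way.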

Little wonder that the roughly 15,000 content moderators hired to support Facebook’s algorithms are overworked. Last year a New York University Stern School of Business study recommended that Facebook double those workers to 30,000 to monitor posts properly if AI isn’t up to the task. Cathy O’Neil, author of Weapons of Math Destruction, has said point-blank that Facebook’s AI “doesn’t work.” Zuckerberg, for his part, has told lawmakers that it’s difficult for AI to moderate posts because of the nuances of speech.

Musk’s overpromising of AI is practically legendary. In 2019 he told Tesla investors that he “felt very confident” there would be one million Model 3s on the streets as driverless robotaxis. His timeframe: 2020. Instead, Tesla customers currently have the privilege of paying $10,000 for special software that will, one day (or who knows?), deliver fully autonomous driving capabilities. Until then, the cars can park, change lanes and drive onto the highway by themselves, with the occasional serious mistake. Musk recently conceded in a tweet that generalized self-driving technology was “a hard problem.”

More surprising: AI has also been falling short in healthcare, an area that has held some of the most promise for the technology. Earlier this year a study in Nature analyzed dozens of machine-learning models designed to detect signs of COVID-19 in X-rays and CT scans. It found that none could be used in a clinical setting due to various flaws. Another study published last month in the British Medical Journal found that 94% of AI systems that scanned for signs of breast cancer were less accurate than the analysis of a single radiologist. “There’s been a lot of hype that [AI scanning in radiology] is imminent, but the hype got ahead of the results somewhat,” says Sian Taylor-Phillips, a professor of population health at Warwick University who also ran the study.

Government advisors will draw on her results to decide whether such AI systems are doing more good than harm and are thus ready for use. In this case, the harm doesn’t seem obvious. After all, AI-powered systems for spotting breast cancer are designed to be overly cautious, and are much more likely to give false alarms than miss signs of a tumor. But the recall rate for breast cancer screening (the share of screened women called back for further tests) is 9% in the U.S. and 4% in the U.K., and even a tiny increase in it means increased anxiety for thousands of women from false alarms. “That is saying we accept harm for women screened just so we can implement new tech,” says Taylor-Phillips.
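The arithmetic behind “thousands of women” is simple: extra recalls equal the number of screens multiplied by the rise in the recall rate. The Python sketch below uses hypothetical inputs (2 million screens a year and a half-point rise, from 4.0% to 4.5%); neither figure comes from the study or from any national screening program.

```python
def extra_recalls(screened: int, old_rate_pct: float, new_rate_pct: float) -> int:
    """Additional women called back when the recall rate rises from old_rate_pct to new_rate_pct (in percent)."""
    return round(screened * (new_rate_pct - old_rate_pct) / 100)


# Hypothetical inputs for illustration: 2 million screens, recall rate 4.0% -> 4.5%.
print(extra_recalls(2_000_000, 4.0, 4.5))  # prints 10000
```

Even a fraction of a percentage point, applied across a national screening program, adds up to thousands of callbacks.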

It seems the errors are not confined to just a few studies. “A few years ago there was a lot of promise and a lot of hype about AI being a first pass for radiology,” says Kathleen Walch, managing partner at AI market intelligence firm Cognilytica. “What we’ve started to see is the AI is not detecting these anomalies at any sort of rate that would be helpful.”

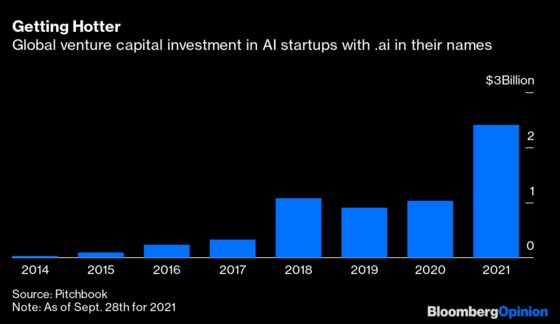

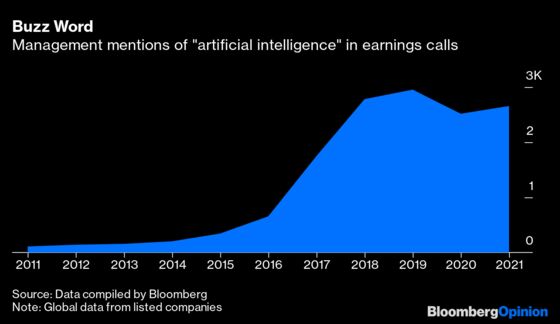

Still, none of these red flags have stopped a flood of money going into AI. Global venture capital investment into AI startups has soared in the past year following a steady climb, according to PitchBook Data Inc., which tracks private capital markets. Mentions of “artificial intelligence” in corporate earnings calls have climbed steadily over the past decade and refused to die down, according to an analysis of transcripts by Bloomberg.

With all this investment, why isn’t AI where we hoped it would be? Part of the problem is the puffery of technology marketing. But AI scientists themselves may also be partly to blame.

The systems rely on two things: a functioning model, and underlying data to train that model. To build good AI, programmers need to spend the vast majority of their time, perhaps 90%, on the data: collecting it, categorizing it and cleaning it. It is dull and difficult work. It is arguably also neglected by the machine-learning community today, as scientists put more value and prestige on the intricacies of AI architectures, or how elaborate a model is.

One result: The most popular datasets used to build AI systems for tasks such as computer vision and language processing are filled with labeling errors, according to a recent study by scientists at MIT. A cultural focus on elaborate model-building is, in effect, holding AI back.

But there are encouraging signs of change. Scientists at Alphabet Inc.’s Google recently complained about the model-versus-data problem in a conference paper, and suggested ways to create more incentives to fix it.

Businesses are also shifting their focus away from “AI-as-a-service” vendors who promise to carry out tasks straight out of the box, like magic. Instead, they are spending more money on data-preparation software, according to Brendan Burke, a senior analyst at PitchBook. He says that pure-play AI companies like Palantir Technologies Inc. and C3.ai Inc. “have achieved less-than-outstanding outcomes,” while data science companies like Databricks Inc. “are achieving higher valuations and superior outcomes.”

It’s fine for AI to occasionally mess up in low-stakes scenarios like movie recommendations or unlocking a smartphone with your face. But in areas like healthcare and social media content, it still needs more training and better data. Rather than try to make AI work today, businesses need to lay the groundwork with data and people to make it work in the (hopefully) not-too-distant future.

Facebook’s most recent quarterly report on its content moderation efforts said that nudity had an extremely low prevalence on the site, but that it could not estimate how prevalent bullying and harassment were.

This column does not necessarily reflect the opinion of the editorial board or Bloomberg LP and its owners.

Parmy Olson is a Bloomberg Opinion columnist covering technology. She previously reported for the Wall Street Journal and Forbes and is the author of "We Are Anonymous."

©2021 Bloomberg L.P.