Racist Emojis Are the Latest Test for Facebook, Twitter Moderators

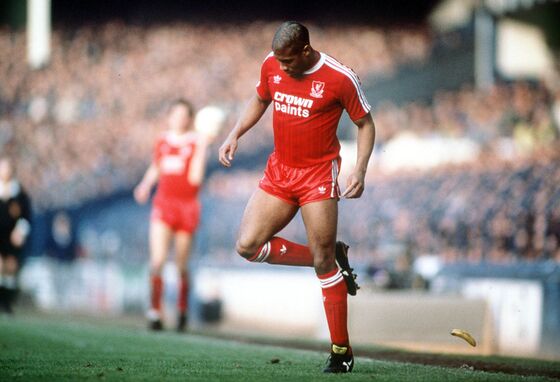

(Bloomberg Businessweek) -- In a soccer game in Liverpool’s Goodison Park in 1988, player John Barnes stepped away from his position and used the back of his heel to kick away a banana that had been thrown toward him. Captured in an iconic photo, the moment encapsulated the racial abuse that Black soccer players then faced in the U.K.

More than 30 years later, the medium has changed, yet the racism persists: After England lost to Italy this July in the final of the UEFA European Championship, Black players for the English side faced an onslaught of bananas. Instead of physical fruit, these were emojis slung at their social media profiles, along with monkeys and other imagery. “The impact was as deep and as meaningful as when it was actual bananas,” says Simone Pound, director of equality, diversity, and inclusion for the U.K.’s Professional Footballers’ Association.

Facebook Inc. and Twitter Inc. faced wide criticism for taking too long to screen out the wave of racist abuse during this summer’s European championship. The moment highlighted a long-standing issue: Despite spending years developing algorithms to analyze harmful language, social media companies often don’t have effective strategies for stopping the spread of hate speech, misinformation, and other problematic content on their platforms.

Emojis have emerged as a stumbling block. When Apple Inc. introduced emojis with different skin tones in 2015, the tech giant came under criticism for enabling racist commentary. A year later Indonesia’s government drew complaints after it demanded social networks remove LGBTQ-related emojis. Some emojis, including the one depicting a bag of money, have been linked to anti-Semitism. Black soccer players have been frequently targeted: The Professional Footballers’ Association and data science company Signify conducted a study last year of racially abusive tweets directed at players and found that 29% included some form of emoji.

Over the past decade, the roughly 3,000 pictographs that constitute emoji language have become a vital part of online communication. Today it’s hard to imagine a text message conversation without them. The ambiguity that is part of their charm doesn’t come without problems, though. A winking face can indicate a joke or a flirtation. Courts end up debating issues such as whether it counts as a threat to send someone an emoji of a pistol.

This matter is confusing to human lawyers, but it’s even more confounding for computer-based language models. Some of these algorithms are trained on databases that contain few emojis, says Hannah Rose Kirk, a doctoral researcher at the Oxford Internet Institute. These models treat emojis as new characters, meaning the algorithms must start from scratch in analyzing their meaning based on context.

“It’s a new emerging trend, so people are not aware of it as much, and the models lag behind humans,” says Lucy Vasserman, who’s the engineering manager for a team at Google’s Jigsaw, which develops algorithms to flag abusive speech online. What matters is “how frequently they appear in your test and training data.” Her team is working on two new projects that could improve the analysis of emojis: one that involves mining vast amounts of data to understand trends in language, and another that factors in uncertainty.

Tech companies have cited technical complexity to obscure more straightforward solutions to many of the most common abuses, according to critics. “Most usage isn’t ambiguous,” says Matthew Williams, director of Cardiff University’s HateLab. “We need not just better AI going forward but bigger and better moderation teams.”

Emoji use has been underanalyzed relative to its importance to modern online communication, Kirk says. She found her way to studying the pictographs after earlier work on memes. “The thing we found really puzzling as researchers was, why are Twitter and Instagram and Google’s solutions not better at emoji-based hate?” she says.

Frustrated by the poor performance of existing algorithms at detecting threatening use of emojis, Kirk built her own model, using humans to help teach the algorithms to understand emojis rather than leaving software to learn on its own. The result, she says, was far more accurate than the original algorithms developed by Jigsaw and other academics that her team tested. “We demonstrated, with relatively low effort and relatively few examples, you can very effectively teach emojis,” she says.

Mixing humans with tech, along with simplifying the approach to moderating speech, has also been a winning formula for startup Respondology in Boulder, Colo., which offers its screening tools across Nascar, the NBA, and the NFL. It works with the Detroit Pistons, Denver Broncos, and leading English soccer teams. Rather than relying on a complicated algorithm, the company allows teams to hide comments that include certain phrases and emojis with a blanket screen. “Every single client that comes to us, particularly the sport clients—leagues, teams, clubs, athletes—all ask about emojis in the first conversation,” says Erik Swain, Respondology’s president. “You almost don’t need AI training of your software to do it.”
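A blanket screen of the kind described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Respondology's actual software: the blocklist contents and the names `BLOCKED_EMOJIS`, `BLOCKED_PHRASES`, and `should_hide` are hypothetical, and the two emojis are chosen only because they are the ones named earlier in this story.

```python
# Hypothetical blocklists; the article does not say which emojis or
# phrases any team actually screens for.
BLOCKED_EMOJIS = {
    "\U0001F34C",  # banana
    "\U0001F412",  # monkey
}
BLOCKED_PHRASES = {"example blocked phrase"}


def should_hide(comment: str) -> bool:
    """Return True if the comment contains any blocked emoji or phrase."""
    # Emojis are matched exactly, character for character.
    if any(emoji in comment for emoji in BLOCKED_EMOJIS):
        return True
    # Phrases are matched case-insensitively.
    text = comment.casefold()
    return any(phrase in text for phrase in BLOCKED_PHRASES)


comments = [
    "Great game tonight",
    "Unlucky \U0001F34C",
    "An Example Blocked Phrase appears here",
]
# Keep only the comments that pass the screen.
visible = [c for c in comments if not should_hide(c)]
```

A screen like this has no notion of context, so it would also hide harmless uses of a blocked emoji; that trade-off is presumably part of what a team accepts when it opts for a blanket rule over a trained model.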

Facebook acknowledges that it told users incorrectly that certain emoji use during the UEFA European Championship this summer didn’t violate its policies when in fact it did. It says it’s begun automatically blocking certain strings of emojis associated with abusive speech, and it also allows users to specify which emojis they don’t want to see. Twitter said in a statement that its rules against abusive posts include hateful imagery and emojis.

These actions may not be sufficient to quell critics. Professional athletes speaking out about the racist abuse they face has become yet another factor in the broader march toward potential government regulation of social media. “We’ve all got hand-wringing and regret, but they didn’t do anything, which is why we have to legislate,” says Damian Collins, a U.K. Parliament member who’s leading work on an online safety bill. “If people that have an interest in generating harmful content can see that the platforms are particularly ineffective at spotting the use of emojis, then we will see more and more emojis being used in that context.” —With Adeola Eribake

©2021 Bloomberg L.P.