Tech’s Shadow Workforce Sidelined, Leaving Social Media to the Machines

(Bloomberg) -- The Covid-19 pandemic has disrupted the sprawling networks of contract workers who keep social-media services running smoothly. Software is picking up the slack, but Facebook Inc. and YouTube are already warning there’ll be less content moderation and slower customer support.

While tech platforms believe artificial intelligence software will ultimately reduce the need for human oversight, many experts think the technology is not yet ready to take on the nuanced decision-making required for tasks like content moderation. “Now it's going to be really obvious to everyone how poorly they actually work,” said Hannah Bloch-Wehba, a law professor at Drexel University who studies internet governance.

Facebook has about 15,000 contract workers policing its platform. While full-time employees log on remotely, the company won’t let contractors who filter disturbing content work from home, citing privacy concerns and legal considerations. Many are employed through staffing firms such as Accenture Plc, which recently sent some Silicon Valley-based workers home to comply with a shelter-in-place order. One moderator who asked not to be named for fear of retribution said he was told the cuts would last at least three weeks. As he left the office on March 16, he wondered if he’d ever return. Accenture didn’t respond to a request for comment.

The following day, Facebook users began complaining that their posts were being removed at curiously high rates. Jodi Rudoren, editor-in-chief of The Forward, complained on Twitter that her newspaper’s links were disappearing, cutting traffic to its website. Within hours, Facebook restored many of the links. The company blamed a glitch in its spam-moderation software, and executive Guy Rosen said it was “unrelated to any changes in our content moderator workforce.”

Still, the incident undermined confidence in Facebook’s technology at a time when the company is relying more on automation. “I posted a scientific article about COVID spread and it was censored. That is some Orwellian Machine Learning you have there,” genomics scientist Kevin McKernan wrote on Twitter. “If you censor COVID, I’m off the platform forever.”

Facebook Chief Executive Officer Mark Zuckerberg told reporters the next day that shortages of contract workers would mean less content moderation. With fewer people to make difficult judgment calls on what content to keep up, the company is prioritizing the most urgent cases, such as posts recommending people drink bleach to cure Covid-19. Other problem areas, such as “back-and-forth accusations a candidate might make in an election,” will get less attention, he said.

The largest U.S. internet gatekeepers have come under immense criticism in recent years for spreading toxic content and misinformation. In response, they hired thousands of contractors who work alongside software to filter out the dross. The novel coronavirus is forcing the companies to re-assess that combination.

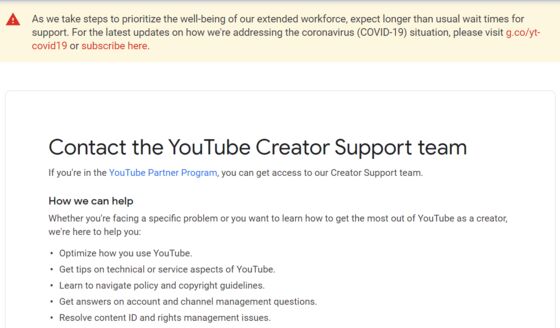

Google, which has more than 10,000 contractors, recently said it would temporarily increase the use of automated content moderation on its services, including YouTube. The internet's largest video site warned that this would mean more videos being removed. Creators can challenge these automated decisions, but YouTube said “workforce precautions will also result in delayed appeals reviews.” A similar message appeared at the top of the YouTube Creator Support website on March 26.

Much of the public debate over content moderation has centered on social and political issues, as when YouTube's AI systems labeled footage of the Notre Dame cathedral fire a conspiracy theory last year. But it can also hurt creators who rely on YouTube and similar services like Instagram for income. YouTube's automated decisions about advertising, for instance, have sparked outrage from its stars for years.

As global Covid-19 cases surged earlier this month, YouTube's systems automatically pulled ads from videos that mentioned the virus in an effort to curb misinformation. After creators protested, CEO Susan Wojcicki announced changes to the company’s guidelines to allow ads on a limited number of video channels that go through a formal approval process. “Sometimes these automated systems can make mistakes, which we know can be frustrating,” a YouTube creator support representative said in a video describing the certification procedure.

On Tuesday, Forrest Starling, who runs the popular gaming YouTube channel KreekCraft, alerted YouTube that far fewer people were visiting his channel from the service’s search engine. He couldn’t get an explanation from the company, but suspected an algorithmic mishap. “It’s a very weird time in the world right now and I understand everyone there is doing the absolute best they can,” he wrote in an email. “It’s just frustrating when issues like this pop up.”

The day after, YouTube issued a statement attributing problems like Starling’s to Covid-19 related staff shortages. “Content may not show in search, etc. until a reviewer takes a look, & w/fewer reviewers available, this is taking longer,” the company wrote on Twitter. “Completely understandable considering the circumstances,” Starling said afterward.

The staffing challenges for tech companies may get worse before they get better. On March 24, the government of India ordered all 1.3 billion of its citizens to stay in their homes for 21 days. The country is a significant source of remote technical and support staff for U.S. tech companies.

A YouTube spokesman described the staffing shifts as a temporary response to virus-related disruptions. Facebook’s Zuckerberg, meanwhile, hasn’t been shy about his long-term vision of a content-review regime that relies far more on machines than humans. That vision holds appeal because content moderation is labor-intensive and brutal, with some workers complaining of trauma from exposure to disturbing content.

Regardless of the long-term prospects, people like Starling see troubling signs. On Thursday, he sent a tweet from KreekCraft’s Twitter page: “Well I woke up and YouTube crashed,” he said. He added an emoji of a face sneezing.

©2020 Bloomberg L.P.